With the Magento shop system, you can quickly and easily create many individual, independent shops that all look different, offer different products, have different prices and, above all, are reachable under their own domains.

But what about search engine optimization for Google, Bing, etc. when you run several shops? Since all shops share a single file system, you need a few tricks here.

- Create one sitemap.xml per store:

By default, Magento can only create the one sitemap.xml. There is no (standard) way to rename it (more info here). Of course there are extensions that handle this quickly and conveniently, but there is another way.

You can put each sitemap.xml in a different folder.

So, in the Magento root directory, we create one directory per shop, for example:

– sitemap-shop1

– sitemap-shop2

– sitemap-shop3

Then, in the Magento backend, we go to Catalog > Google Sitemap.

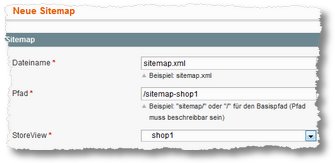

There we click “Add Sitemap”, enter sitemap.xml as the filename, enter the path (for example /sitemap-shop1/) and select the corresponding store view. Then click “Save & Generate”.

The sitemap has now been created and should be in the correct folder (better to double-check).

- Configure the sitemaps to be updated automatically:

To have Magento update the sitemaps automatically (the Magento cron must be enabled on the server side!), go to System > Configuration > Catalog > Google Sitemap and specify there that the sitemap is generated, e.g., daily.

- Make sure that Google and other bots also find the correct sitemap, i.e. create the appropriate robots.txt:

Now for a general (small) problem. Normally you create only one robots.txt per site, and Google and co. expect this robots.txt (even if they do not strictly need it). For a multi-shop, however, we strictly speaking need several robots.txt files that, among other things, say where the sitemap is. So we create, for example:

– robots-shop1.txt

– robots-shop2.txt

– robots-shop3.txt

In these files we put, first, a standard part, for example to keep the search engines away from certain pages:

    User-agent: *
    Disallow: /index.php/
    Disallow: /*?
    Disallow: /*.js$
    Disallow: /*.css$
    Disallow: /*.php$
    Disallow: /admin/
    Disallow: /app/
    Disallow: /catalog/
    Disallow: /catalogsearch/
    Disallow: /checkout/
    Disallow: /customer/
    Disallow: /downloader/
    Disallow: /js/
    Disallow: /lib/
    Disallow: /media/
    Disallow: /newsletter/
    Disallow: /pkginfo/
    Disallow: /report/
    Disallow: /review/
    Disallow: /skin/
    Disallow: /var/
    Disallow: /wishlist/

and add as the last line

    Sitemap: http://www.shop1.de/sitemap-shop1/sitemap.xml

We do this for every shop, changing the domain and the location of the respective sitemap.xml in each file. In the end we have three robots files, all in the Magento root directory.
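With more than a handful of shops, writing these files by hand gets tedious. The following is a minimal sketch of how they could be generated, assuming the `sitemap-<shop>` directories and `robots-<shop>.txt` names used above. The `SHOPS` mapping, the shortened `STANDARD_RULES` list and both function names are hypothetical and only serve as illustration.

```python
from pathlib import Path

# Standard part shared by all shops (shortened here; use the full list above).
STANDARD_RULES = [
    "User-agent: *",
    "Disallow: /index.php/",
    "Disallow: /admin/",
    "Disallow: /checkout/",
]

# Hypothetical mapping: shop key -> domain under which the shop is reachable.
SHOPS = {
    "shop1": "www.shop1.de",
    "shop2": "www.shop2.de",
    "shop3": "www.shop3.de",
}


def build_robots(shop: str, domain: str) -> str:
    """Return the robots file content for one shop, ending with its Sitemap line."""
    sitemap = f"Sitemap: http://{domain}/sitemap-{shop}/sitemap.xml"
    return "\n".join(STANDARD_RULES + [sitemap]) + "\n"


def write_all(magento_root: Path) -> None:
    """Write robots-<shop>.txt for every shop into the given directory."""
    for shop, domain in SHOPS.items():
        target = magento_root / f"robots-{shop}.txt"
        target.write_text(build_robots(shop, domain))
```

Calling `write_all(Path("."))` from the Magento root directory would then create the three files in one go.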

One last step is still missing.

- Use the .htaccess to define which robots file is responsible for which domain:

To do this, we open the .htaccess in the Magento root directory and add the following lines at the end:

    ## Which robots file for which domain ##
    RewriteCond %{HTTP_HOST} ^.*?shop1\.de$ [NC]
    RewriteRule ^robots\.txt$ robots-shop1.txt [L]
    RewriteCond %{HTTP_HOST} ^.*?shop2\.de$ [NC]
    RewriteRule ^robots\.txt$ robots-shop2.txt [L]
    RewriteCond %{HTTP_HOST} ^.*?shop3\.de$ [NC]
    RewriteRule ^robots\.txt$ robots-shop3.txt [L]
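Since the rewrite block repeats the same two lines per shop, it can also be generated. This is only a sketch under a few assumptions: the robots files are named `robots-<shop>.txt` as listed earlier, dots in the domain are escaped so they match literally, and an `[L]` flag is added so that processing stops after the first match. The function name `rewrite_rules` is made up for this example.

```python
def rewrite_rules(shops: dict[str, str]) -> str:
    """Build .htaccess lines that map /robots.txt to robots-<shop>.txt per domain."""
    lines = ["## Which robots file for which domain ##"]
    for shop, domain in shops.items():
        host = domain.replace(".", r"\.")  # escape dots so they match literally
        lines.append(f"RewriteCond %{{HTTP_HOST}} ^.*?{host}$ [NC]")
        lines.append(rf"RewriteRule ^robots\.txt$ robots-{shop}.txt [L]")
    return "\n".join(lines) + "\n"
```

For example, `print(rewrite_rules({"shop1": "shop1.de", "shop2": "shop2.de"}))` prints a block that can be pasted at the end of the .htaccess.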

Now everything should work. The bots come to the site, the .htaccess hands them the robots file for the respective domain, and that file points to the sitemap in the respective subdirectory. This way everything is cleanly indexed and assigned to the respective shops and domains of the multistore.

Used with Magento version 1.8.1.0.

Comments? Additions? Notes? Gladly!
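To sanity-check the setup, one could take the robots.txt that each domain actually returns and confirm that its Sitemap line is an absolute URL on that same domain. The sketch below only covers the checking part and works on the file content as a string. How the text is fetched is left open, and both function names are hypothetical.

```python
from urllib.parse import urlparse


def sitemap_urls(robots_text: str) -> list[str]:
    """Return the URLs of all 'Sitemap:' lines (field name compared case-insensitively)."""
    urls = []
    for line in robots_text.splitlines():
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "sitemap":
            urls.append(value.strip())
    return urls


def sitemap_matches_domain(robots_text: str, domain: str) -> bool:
    """True if at least one Sitemap URL exists and all are absolute URLs on `domain`."""
    urls = sitemap_urls(robots_text)
    return bool(urls) and all(
        urlparse(u).scheme in ("http", "https") and urlparse(u).hostname == domain
        for u in urls
    )
```

A robots file that was served for the wrong shop, or one with a relative sitemap path, would make `sitemap_matches_domain` return `False`.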

If you would rather handle this with extensions: http://store.cueblocks.com/xml-sitemap-plus-generator-splitter.html and http://store.cueblocks.com/cueblocks-robots-txt.html are recommended.

By the way, Google advises against blocking JS and CSS.

(cf. http://googlewebmastercentral.blogspot.de/2014/05/rendering-pages-with-fetch-as-google.html)

Furthermore, the assignment of the sitemaps to each of the multistores can be handled cleanly via the .htaccess, so that the matching /sitemap.xml is always delivered.

(cf. http://oldwildissue.wordpress.com/2012/02/27/magento-google-sitemap-generation-for-multistore-installation/)

Unfortunately, you then also have to serve different robots.txt files, because Sitemap: requires a “full” URL. (cf. http://www.sitemaps.org/protocol.html#submit_robots)

But the effort is probably worth it in order to intercept all /sitemap.xml requests and save yourself additional extensions.

Regards